In Part 1 of this series, I examine how client-side tuning parameters impact NVMe over TCP (NVMe/TCP) performance with Lightbits, using a 4K 100% random read workload as the baseline.

In environments where the backend storage can sustain high NVMe/TCP performance — as is the case with Lightbits — the primary limiter often shifts away from the storage layer itself and onto how much work the client is driving and how effectively the Linux NVMe/TCP stack is parallelized.

This post walks through what I measured and how to tune nvme connect -i correctly.

Test environment

- Client: Single server, 100Gb NIC

- CPU governor set to performance to eliminate frequency scaling variability during testing

- Target: 3-node Lightbits cluster

- Volumes: 2 namespaces, RF=3 (replication factor)

- FIO Workload:

  - bs=4k

  - rw=randread

  - ioengine=libaio

  - direct=1

- What I varied:

  - Total outstanding I/O = numjobs × iodepth

  - NVMe/TCP I/O queues = nvme connect -i <queues>
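To make the workload definition concrete, here is a minimal sketch that builds a fio command line from the parameters listed above. The device path, job name, runtime, and the particular numjobs/iodepth splits are my own placeholders for illustration; the post only fixes the product numjobs × iodepth, not how it was divided.

```python
import shlex


def outstanding_io(numjobs, iodepth):
    """Total outstanding I/O as defined above: numjobs x iodepth."""
    return numjobs * iodepth


def fio_command(device, numjobs, iodepth, runtime_s=60):
    """Build a fio argv for the 4K random-read workload.

    `device` and `runtime_s` are placeholders, not values from the test.
    """
    return [
        "fio",
        "--name=randread-4k",      # hypothetical job name
        f"--filename={device}",
        "--bs=4k",
        "--rw=randread",
        "--ioengine=libaio",
        "--direct=1",
        f"--numjobs={numjobs}",
        f"--iodepth={iodepth}",
        "--time_based",
        f"--runtime={runtime_s}",
        "--group_reporting",
    ]


# Example splits that yield 128, 256, and 512 outstanding I/Os (assumed).
for numjobs, iodepth in [(8, 16), (16, 16), (16, 32)]:
    cmd = fio_command("/dev/nvme0n1", numjobs, iodepth)
    print(outstanding_io(numjobs, iodepth), shlex.join(cmd))
```

Printing the command instead of running it keeps the sketch safe to execute on a machine without the volumes attached.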

Outstanding I/O is the primary scaling knob

Before talking about queues, we need to look at how many I/Os the client is actually driving in parallel.

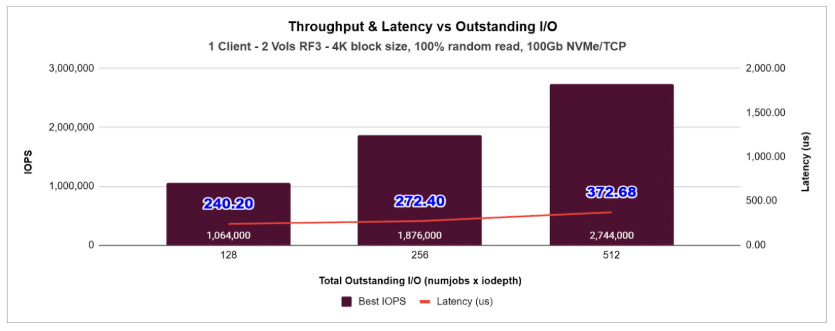

This chart shows the best-case throughput and latency as I increased the total outstanding I/O from 128 to 512.

Two things jump out immediately:

- Throughput rises steadily across this range, from ~1.06M IOPS at 128 outstanding I/O to ~2.74M IOPS at 512 (about 2.6× the throughput for 4× the concurrency).

- Latency increases gradually but remains under 400µs, even as IOPS approach 2.7M.

At 512 outstanding I/O, the system is approaching the effective packet-rate limits of a 100GbE link for 4K traffic, demonstrating that NVMe/TCP can efficiently consume available network bandwidth in this configuration.
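The arithmetic behind that statement is easy to check. This sketch converts an IOPS figure into payload bandwidth for 4K blocks and compares it to the nominal 100Gb/s line rate. It counts data payload only, so Ethernet, IP, TCP, and NVMe/TCP header overhead would push real wire utilization somewhat higher than the figure it prints.

```python
BLOCK_BYTES = 4096   # bs=4k
LINK_GBPS = 100.0    # nominal line rate of the client NIC


def payload_gbps(iops, block_bytes=BLOCK_BYTES):
    """Payload bandwidth in Gb/s for a given IOPS rate (headers excluded)."""
    return iops * block_bytes * 8 / 1e9


def link_utilization(iops, link_gbps=LINK_GBPS):
    """Fraction of the nominal link rate consumed by payload alone."""
    return payload_gbps(iops) / link_gbps


for iops in (1.06e6, 2.74e6):
    print(f"{iops / 1e6:.2f}M IOPS -> {payload_gbps(iops):.1f} Gb/s payload "
          f"({link_utilization(iops):.0%} of line rate)")
```

At ~2.74M IOPS the payload alone works out to roughly 90 Gb/s, which is why there is little headroom left on a single 100GbE link.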

For this workload, the efficient operating window spans 128 to 512 outstanding I/O — where concurrency drives predictable scaling while maintaining tightly controlled latency.

What does -i actually do?

The -i flag in:

nvme connect -t tcp … -i <queues>

controls how many NVMe/TCP I/O queues the client opens per target connection (the long form of the flag is --nr-io-queues).

Each queue is its own TCP socket, submission path, and completion context. More queues mean more CPU parallelism when handling I/O completions.
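As a rough illustration, the sketch below assembles an nvme connect invocation with an explicit queue count. The -t, -a, -s, -n, and -i flags are standard nvme-cli options, but the target address and NQN are hypothetical placeholders, and a real Lightbits connection may need additional options that this post does not cover.

```python
def nvme_connect_command(traddr, nqn, io_queues, trsvcid="4420"):
    """Build an `nvme connect` argv that requests `io_queues` I/O queues.

    traddr and nqn are supplied by the caller; the values used below are
    placeholders, not real endpoints.
    """
    if io_queues < 1:
        raise ValueError("io_queues must be at least 1")
    return [
        "nvme", "connect",
        "-t", "tcp",            # transport
        "-a", traddr,           # target address
        "-s", trsvcid,          # target port
        "-n", nqn,              # subsystem NQN
        "-i", str(io_queues),   # number of I/O queues (one TCP socket each)
    ]


# Hypothetical target; 192.0.2.0/24 is reserved for documentation.
cmd = nvme_connect_command("192.0.2.10", "nqn.2014-08.org.example:vol1", 64)
print(" ".join(cmd))
```

Building the argument list in one place makes it straightforward to sweep -i across several values while holding everything else constant.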

Why latency drops when you increase -i

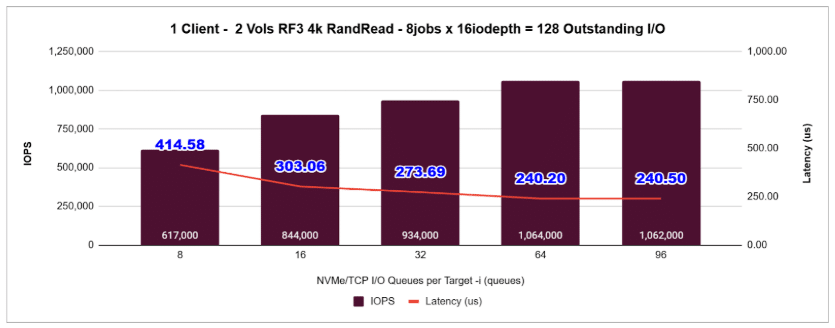

Here’s where things get interesting. Even at a moderate workload depth of 128 outstanding I/O, latency drops significantly as the number of NVMe/TCP I/O queues increases.

In this test, increasing -i from 8 to 64 reduced latency from approximately 415µs down to ~240µs, while throughput increased from ~617K to ~1.06M IOPS. The storage backend did not change — only the level of client-side queue parallelism did.

This behavior is driven by software queue contention in the Linux NVMe/TCP stack, not by storage limitations. With a small number of queues, in-flight I/Os are forced through a limited set of completion paths, creating serialization pressure in the networking and block layers. This artificially inflates latency even when the storage platform is capable of higher performance. You can observe this directly on the client using the ss utility to monitor the Recv-Q and Send-Q values for active NVMe/TCP connections on port 4420.
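One way to collect those numbers is to parse the output of ss -tn and keep only the sockets whose peer port is 4420. The sketch below is a minimal example of that idea. The sample text is made up to show the column layout and is not output from my test system. Treat sustained nonzero Recv-Q or Send-Q values as a hint of backlog on a socket rather than as proof of where the bottleneck is.

```python
import subprocess


def parse_ss(output, port=4420):
    """Return (local, peer, recv_q, send_q) for TCP sockets to `port`."""
    rows = []
    for line in output.splitlines():
        fields = line.split()
        # Data rows look like: State Recv-Q Send-Q Local:Port Peer:Port
        # The header row is skipped because its second field is not numeric.
        if len(fields) < 5 or not fields[1].isdigit():
            continue
        _state, recv_q, send_q, local, peer = fields[:5]
        if peer.rsplit(":", 1)[-1] == str(port):
            rows.append((local, peer, int(recv_q), int(send_q)))
    return rows


def nvme_tcp_socket_queues(port=4420):
    """Run `ss -tn` on the client and parse the result (requires iproute2)."""
    out = subprocess.run(["ss", "-tn"], capture_output=True,
                         text=True, check=True).stdout
    return parse_ss(out, port)


# Illustrative sample only; addresses and queue values are invented.
SAMPLE = """\
State  Recv-Q  Send-Q  Local Address:Port  Peer Address:Port
ESTAB  0       0       10.0.0.5:51234      10.0.0.20:4420
ESTAB  0       72      10.0.0.5:51236      10.0.0.20:4420
ESTAB  0       0       10.0.0.5:22         10.0.0.9:50122
"""

for local, peer, recv_q, send_q in parse_ss(SAMPLE):
    print(local, peer, recv_q, send_q)
```

On a live client, calling nvme_tcp_socket_queues() in a loop while fio runs gives a per-socket view; with a small -i you would expect fewer sockets carrying the same load.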

As the number of queues increases, I/O completion processing spreads across more CPUs and TCP sockets. That parallelism reduces software contention and collapses the excess latency, allowing the client to more efficiently consume the storage bandwidth available to it.

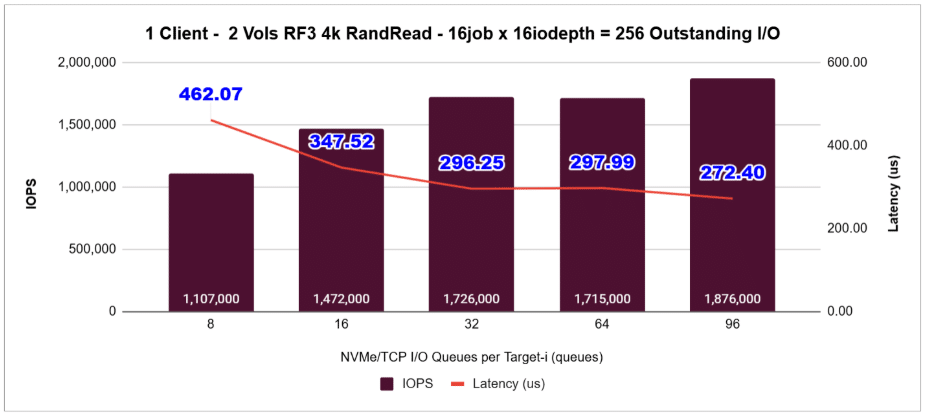

In this chart, increasing -i from 8 to 96 reduced latency by more than 42% while also increasing throughput — without changing the storage backend at all.
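The latency and throughput figures quoted earlier for -i 8 and -i 64 can be cross-checked with Little's Law (average I/Os in flight = throughput × latency). The sketch below does that arithmetic. Both data points come out near 256 I/Os in flight, which would fit 128 outstanding I/Os per volume across the 2 volumes. That per-volume reading is my inference from the numbers, not something the test setup states explicitly.

```python
def in_flight(iops, latency_us):
    """Little's Law: average I/Os in flight = throughput x latency."""
    return iops * latency_us / 1e6


def implied_latency_us(outstanding, iops):
    """Average latency implied by a fixed number of I/Os in flight."""
    return outstanding / iops * 1e6


# (-i value, IOPS, latency in microseconds) as quoted for the 128 test.
for queues, iops, latency_us in [(8, 617e3, 415), (64, 1.06e6, 240)]:
    print(f"-i {queues}: ~{in_flight(iops, latency_us):.0f} I/Os in flight")
```

Because the number of I/Os in flight is fixed by the workload, lower per-I/O latency and higher IOPS are two views of the same improvement: anything that shortens the software path shows up in both.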

How to tune -i with Lightbits

Lightbits does not artificially cap the number of NVMe/TCP queues a client can open. By default, the client can use all available CPU cores for I/O processing.

The -i flag is how you control how much of that CPU parallelism the storage stack actually consumes.

Here’s the guideline:

| Workload Behavior | Recommended -i |
|---|---|
| Low outstanding I/O (light workloads) | 8 – 32 |
| High outstanding I/O (latency sensitive) | 32 – 64 for typical high concurrency; 64+ for very high-core-count systems |
| CPU-constrained client | Keep -i smaller |
| High-core-count server running heavy I/O | Increase -i aggressively |

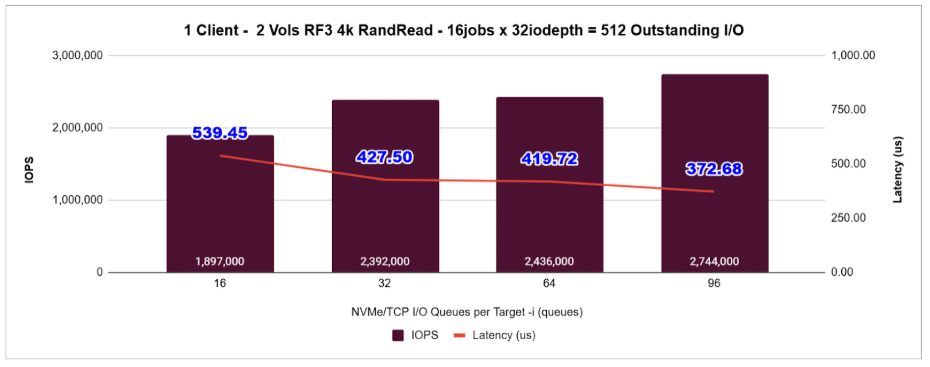

Additional data across outstanding I/O levels

To avoid overwhelming the main story, the remaining charts are shown below.

- 256 outstanding I/O

- 512 outstanding I/O

Across all these graphs, the pattern is consistent:

When the workload is deep enough, adding NVMe/TCP queues reduces software-induced latency and improves throughput.

Final takeaway

One of the strengths of Lightbits as an NVMe/TCP platform is that it does not artificially restrict client-side parallelism, leaving this fully under the customer’s control, because different workloads require very different levels of concurrency.

In practice, overall performance is governed by two simple levers:

- Total outstanding I/O determines how much work the system is driving at any moment.

- -i (NVMe/TCP I/O queues) determines how effectively the Linux NVMe/TCP stack can process that work in parallel.

When these two are aligned, NVMe/TCP delivers excellent throughput and competitive latency. The flexibility to tune queue parallelism is not a liability — it’s an advantage, and it allows Lightbits to adapt cleanly to everything from light, CPU-constrained applications to large, high-core-count servers running the heaviest I/O workloads.

What’s next

This post focused exclusively on a baseline 4K 100% random read workload to establish how outstanding I/O and NVMe/TCP queue parallelism interact on the client side.

In Part 2, I’ll apply the same methodology to 4K random writes and examine how the tuning guidance changes when write amplification, acknowledgements, and durability semantics are taken into account.

In Part 3, I’ll expand the analysis to larger block sizes to show when the workload transitions from CPU/packet-rate bound to bandwidth bound — and how that changes the optimal -i settings.

That should give a more complete, workload-driven tuning framework for NVMe/TCP with Lightbits.