Kubernetes, originally designed for container orchestration, has significantly expanded its role: it is now the backbone of modern application platforms. From microservices to AI/ML pipelines, organizations are deploying workloads faster, in more places, and at greater scale than ever before. However, as Kubernetes takes on more stateful and performance-sensitive workloads, the underlying infrastructure increasingly determines whether these deployments succeed or stall.

That’s where the combination of AMD EPYC™ processors and Lightbits NVMe/TCP software-defined storage comes in — delivering the speed, scalability, and operational simplicity Kubernetes needs to handle the next wave of enterprise workloads.

Kubernetes in the Real World

Kubernetes is flexible enough to run anywhere, but real-world production clusters are complex. They must:

- Serve both stateless and stateful applications without performance trade-offs.

- Scale seamlessly without overprovisioning.

- Handle mixed workloads — databases, analytics, virtual machines — on the same infrastructure.

Traditional storage solutions often require specialized networks (such as Fibre Channel or RDMA-capable fabrics) or tight coupling with compute, both of which can limit flexibility and drive up costs. Meanwhile, compute nodes must deliver high throughput and parallel processing without becoming a bottleneck themselves.

A Compute + Storage Stack Designed for Kubernetes

With high core counts, high memory bandwidth, and extensive PCIe I/O, AMD EPYC processors give Kubernetes clusters the raw horsepower to run diverse workloads simultaneously. Whether a cluster hosts hundreds of microservices or VM-based applications through KubeVirt, EPYC CPUs provide a consistent performance foundation that operators can count on.

Lightbits complements this by delivering NVMe performance over standard Ethernet networks, and it runs natively on AMD-based servers. This means organizations can standardize on a single AMD-powered infrastructure stack for both compute and storage, gaining performance consistency, hardware efficiency, and simplified operations. Through Lightbits' NVMe/TCP implementation and native CSI driver, Kubernetes can dynamically provision and manage persistent volumes without friction, making stateful workloads as easy to deploy as stateless ones.
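To show what dynamic provisioning looks like from the application side, here is a minimal Python sketch. It builds a standard Kubernetes PersistentVolumeClaim and prints it as JSON, which `kubectl apply -f` accepts. The StorageClass name `lightbits-sc`, the claim name `postgres-data`, and the 100Gi size are hypothetical placeholders. The real StorageClass and its Lightbits-specific parameters would be defined by the cluster administrator according to the Lightbits CSI documentation.

```python
import json


def build_pvc(name: str, storage_class: str, size: str) -> dict:
    """Return a PersistentVolumeClaim manifest as a plain dict.

    The claim asks the CSI driver behind `storage_class` to provision a
    volume dynamically; no PersistentVolume has to be created by hand.
    """
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # One node mounts the volume read-write, typical for a database pod.
            "accessModes": ["ReadWriteOnce"],
            # Hypothetical StorageClass assumed to be backed by the Lightbits CSI driver.
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    pvc = build_pvc("postgres-data", "lightbits-sc", "100Gi")
    print(json.dumps(pvc, indent=2))
```

Once the claim is bound, a pod references it through `spec.volumes[].persistentVolumeClaim.claimName`, exactly as it would any other persistent volume.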

Together, they create a balanced, decoupled architecture where compute and storage scale independently, helping organizations avoid the “scale everything at once” trap.
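A back-of-the-envelope model makes the "scale everything at once" trap concrete. The sketch below compares two hypothetical designs. In the coupled design, every node adds a fixed amount of both compute and storage. In the disaggregated design, compute nodes and storage nodes are added separately. For simplicity it assumes the same per-node capacities in both designs. All capacities and demand figures are illustrative assumptions, not values from this test bed.

```python
import math


def coupled_idle(cores_needed, tb_needed, cores_per_node, tb_per_node):
    """Idle (cores, TB) when every node bundles compute and storage.

    Each node adds both resources, so the larger requirement sets the
    node count and the other resource ends up overprovisioned.
    """
    nodes = max(math.ceil(cores_needed / cores_per_node),
                math.ceil(tb_needed / tb_per_node))
    return nodes * cores_per_node - cores_needed, nodes * tb_per_node - tb_needed


def disaggregated_idle(cores_needed, tb_needed, cores_per_node, tb_per_node):
    """Idle (cores, TB) when compute and storage nodes are added separately."""
    compute_nodes = math.ceil(cores_needed / cores_per_node)
    storage_nodes = math.ceil(tb_needed / tb_per_node)
    return (compute_nodes * cores_per_node - cores_needed,
            storage_nodes * tb_per_node - tb_needed)


if __name__ == "__main__":
    # Hypothetical storage-heavy demand: 256 cores but 600 TB of capacity.
    print(coupled_idle(256, 600, cores_per_node=64, tb_per_node=60))        # (384, 0)
    print(disaggregated_idle(256, 600, cores_per_node=64, tb_per_node=60))  # (0, 0)
```

In this example, the coupled design needs ten nodes to reach 600 TB and therefore strands 384 cores. The disaggregated design buys only what each tier needs.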

Why It Matters in the Real World

Imagine a financial services firm running Kubernetes for both high-frequency trading and fraud detection analytics. The trading platform requires ultra-low latency, while the analytics pipeline needs throughput at scale. With AMD EPYC ensuring compute-intensive operations don’t starve I/O, and Lightbits delivering consistent NVMe-class performance to all pods, both workloads run without compromise.

Or consider a telco rolling out 5G core network functions on Kubernetes. Edge deployments benefit from EPYC’s compute density, while Lightbits provides the storage performance needed for real-time packet inspection and subscriber data services — all over the same Ethernet fabric, simplifying deployment at the edge.

Even in AI/ML environments, where training and inference can strain both compute and storage, the combination keeps data pipelines running smoothly. AMD EPYC processors handle parallel compute tasks efficiently, while Lightbits keeps model training jobs supplied with data instead of waiting on storage.

Our Test Bed: Hardware at a Glance

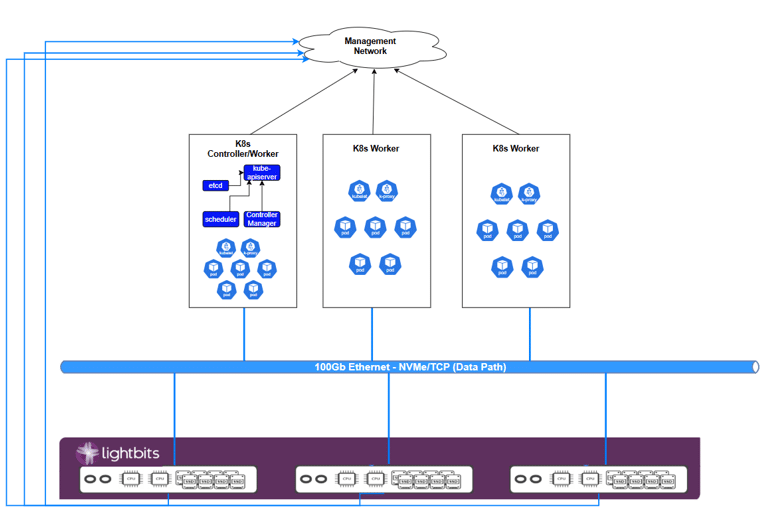

To validate this solution, we built a Kubernetes cluster using AMD-based servers for both compute and storage. Worker nodes ran containerized workloads, while a Lightbits software-defined storage (SDS) cluster, also running on AMD-powered servers, provided NVMe/TCP storage. This configuration reflects a practical, production-like environment in which compute and storage scale independently.

The layout included:

- AMD-based control plane and worker nodes.

- Lightbits SDS cluster running on AMD-based hardware, connected via standard Ethernet.

This architecture ensured a consistent foundation for benchmarking Kubernetes workloads, while showcasing the benefits of an all-AMD compute + storage stack.

Performance Validation

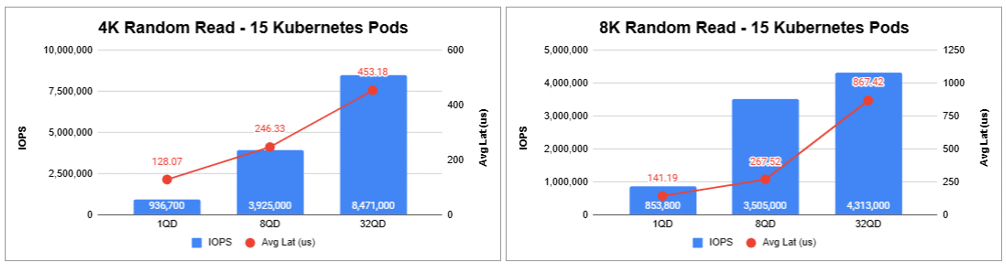

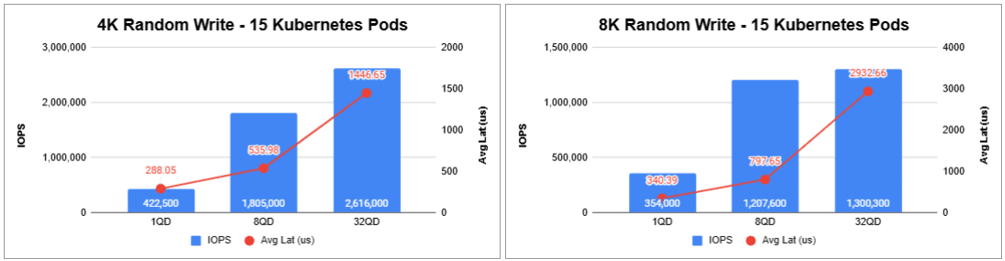

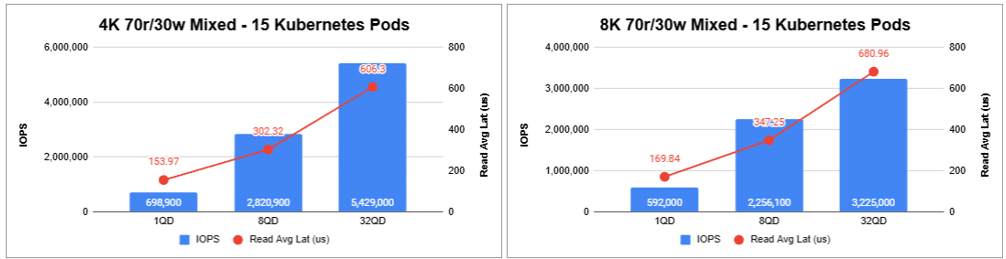

To validate this architecture, we ran a set of synthetic FIO benchmarks focused on 4K and 8K block sizes. These I/O sizes are widely regarded as representative of real-world Kubernetes workloads such as transactional databases, virtualized applications, and mixed analytics.

Using AMD EPYC-powered worker nodes and Lightbits volumes provisioned through the CSI driver, we measured performance across three patterns:

- Random Read (read-intensive, database-like workloads)

- Random Write (logging and ingest-heavy applications)

- 70/30 Mixed Read/Write (a common balance for production systems)
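For readers who want to run a similar sweep, the sketch below generates `fio` command lines for the same matrix: 4K and 8K blocks, random read, random write, and a 70/30 random mix, each across a range of queue depths. The device path, queue-depth list, and runtime are assumptions, since the exact parameters behind the published charts are not stated here. The flags used (`--rw`, `--rwmixread`, `--bs`, `--iodepth`, `--ioengine=libaio`, `--direct=1`, `--time_based`, `--runtime`, `--group_reporting`, `--output-format=json`) are standard fio options.

```python
import itertools
import shlex

# Hypothetical block device path; a pod receives one by consuming a PVC with
# volumeMode: Block under volumeDevices. Write tests overwrite its contents.
DEVICE = "/dev/xvda"

BLOCK_SIZES = ["4k", "8k"]
# (job label, fio --rw mode, extra options)
PATTERNS = [
    ("randread", "randread", []),
    ("randwrite", "randwrite", []),
    ("mixed70-30", "randrw", ["--rwmixread=70"]),
]
QUEUE_DEPTHS = [1, 4, 16, 32, 64]  # assumed sweep, not the published one


def fio_commands(device=DEVICE, runtime_s=60):
    """Yield one fio command line per (block size, pattern, queue depth)."""
    for bs, (label, rw, extra), qd in itertools.product(
            BLOCK_SIZES, PATTERNS, QUEUE_DEPTHS):
        args = [
            "fio",
            f"--name={label}-{bs}-qd{qd}",
            f"--filename={device}",
            f"--rw={rw}",
            *extra,
            f"--bs={bs}",
            f"--iodepth={qd}",
            "--ioengine=libaio",   # async engine so iodepth > 1 takes effect
            "--direct=1",          # bypass the page cache so storage is measured
            "--time_based",
            f"--runtime={runtime_s}",
            "--group_reporting",
            "--output-format=json",
        ]
        yield shlex.join(args)


if __name__ == "__main__":
    for cmd in fio_commands():
        print(cmd)
```

Each command can be run from a pod that has the Lightbits-backed volume attached. The JSON output makes it straightforward to plot IOPS and latency against queue depth.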

The results showed:

- Strong linear scaling of IOPS as queue depth increased across both 4K and 8K workloads.

- Consistently low latency, even under write-heavy and mixed 70/30 scenarios.
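Little's Law links these two results. Average outstanding I/Os (the effective queue depth) equal IOPS multiplied by average latency. If latency stays roughly flat as queue depth rises, IOPS must rise in proportion, which is what linear scaling means. The relationship holds until the storage or network saturates, at which point latency climbs instead. The helpers below apply Little's Law and the conversion from IOPS to bandwidth. The 200 µs latency in the example is a hypothetical value, not a figure read from the charts.

```python
def iops_from_littles_law(queue_depth: int, avg_latency_us: float) -> float:
    """IOPS = outstanding I/Os / average latency in seconds (Little's Law)."""
    return queue_depth / (avg_latency_us / 1_000_000)


def bandwidth_mib_s(iops: float, block_size_kib: int) -> float:
    """Throughput in MiB/s for a given IOPS rate and block size in KiB."""
    return iops * block_size_kib / 1024


if __name__ == "__main__":
    # Illustrative only: a hypothetical 200 µs average latency held constant.
    for qd in (1, 8, 32):
        iops = iops_from_littles_law(qd, 200.0)
        print(f"QD={qd:>2}  IOPS={iops:,.0f}  4K MiB/s={bandwidth_mib_s(iops, 4):.1f}")
```

Doubling queue depth at constant latency doubles IOPS. Moving from 4K to 8K blocks at the same IOPS doubles bandwidth.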

Future-Proofing Your Kubernetes Stack

The Kubernetes ecosystem is evolving toward hybrid workloads, faster release cycles, and higher expectations for application responsiveness. As organizations modernize their platforms, choosing the right compute and storage pairing will make the difference between scaling smoothly and hitting performance ceilings.

With AMD EPYC processors and Lightbits NVMe/TCP storage, organizations get three big advantages:

- Room to grow — plenty of performance capacity for today’s workloads, with headroom for future demands.

- Flexible scaling — compute and storage can be expanded separately, so you only add what you need, when you need it.

- Built-in simplicity — it runs over standard Ethernet and plugs directly into Kubernetes, so deployment and management stay straightforward.

This isn’t just about keeping up with Kubernetes — it’s about building an infrastructure foundation that’s ready for whatever workloads come next.