Scale Services and Maximize Data Pipeline Performance with Lightbits Software-Defined Storage for AI

Lightbits offers unparalleled performance, efficiency, and scalability on which to build your AI and machine learning data platform. High-performance block storage accelerates data pre-processing and model training, speeds up real-time inference and checkpointing, and optimizes Retrieval-Augmented Generation (RAG) databases.

Lightbits block storage software scales beyond petabyte capacities and achieves up to 75M IOPS with consistent sub-millisecond tail latency, making it well suited to vector and other AI-centric databases. Use NVMe over TCP to accelerate your AI data pipeline and scale your services.

Lightbits Software-Defined Storage Solutions for AI

Streamline Data Preprocessing

Raw data is cleaned and transformed into a format that can be effectively utilized in machine learning models.

Accelerate Model Training

During the model training phase, data is frequently accessed and model parameters are continuously adjusted.

Efficient Real-time Inference

Real-time AI requires high-speed storage to make instant predictions for fraud detection, autonomous vehicles, or translation.

Retrieval-Augmented Generation

The vector databases used with LLMs require high-performance storage to return RAG-customized results quickly for chatbots.

Database Optimization

Databases require high peak performance whether they manage real-time AI application data or store training parameters and tags.

Scalability and Flexibility

AI models and datasets are constantly growing and becoming more complex, so the underlying storage infrastructure must scale as well.

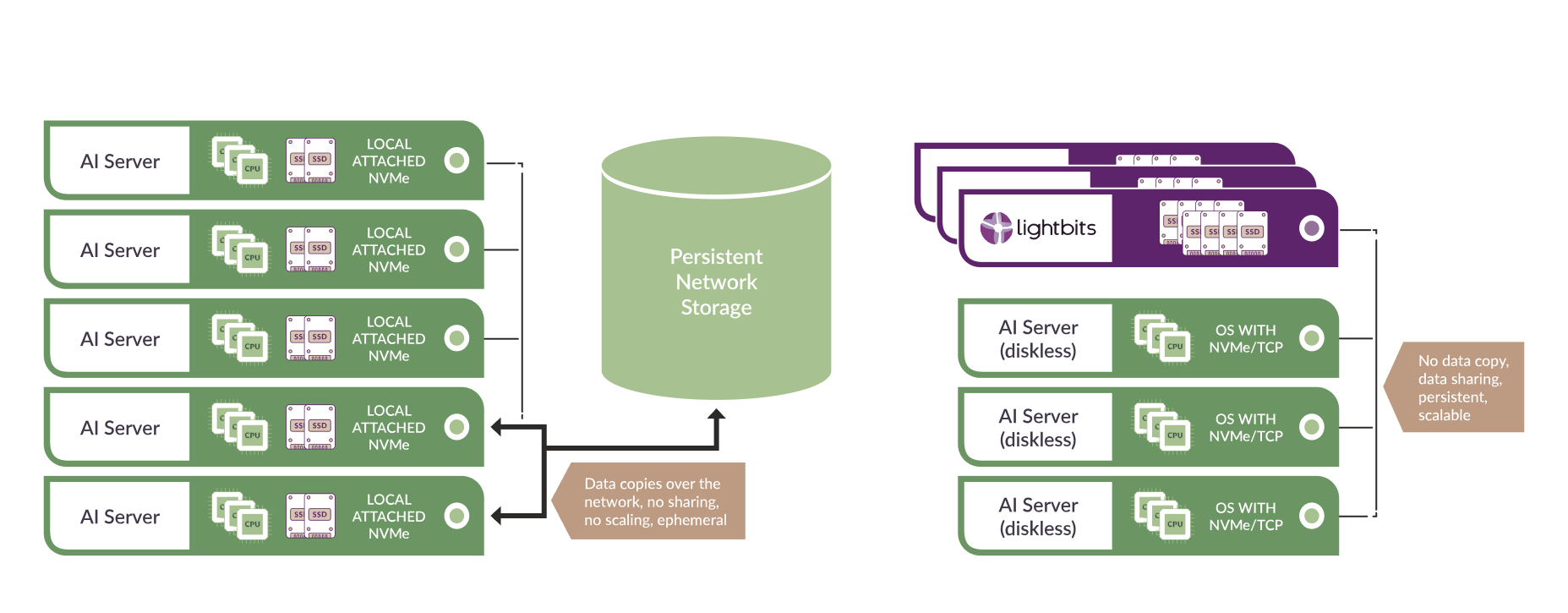

Software-Defined Storage for AI Clouds