Co-authored by: Alexander Tilgner, Sr. Solutions Architect at Boston Server and Storage Solutions GmbH

Drawn together by a common customer use case, Boston and Lightbits have teamed up to show the value that the Lightbits NVMe®/TCP Data Platform can bring to Boston’s customers. Following Lightbits’ VMware certification in 2021, Boston saw that Lightbits could fill a gap in the market by offering NVMe-level performance over regular TCP/IP, without the need for Fibre Channel, RDMA, proprietary hardware, or hyperconverged solutions.

The objective: offer the most cost-effective solution to the customer, while maintaining NVMe performance and low latency. The solution should also be multi-data center compatible and integrate with VMware and Microsoft File Services.

With that in mind, Boston Server and Storage Solutions in Germany and the Lightbits EMEA team ran a proof of value (PoV) on hardware already available in Boston’s Feldkirchen lab. The system under test (SUT) consisted of four storage servers, 10 and 100 GbE networking, and a vSphere host. Various performance and resiliency scenarios were run to make sure the solution would stand up to customers’ specifications and to serve as a benchmark that can be referenced in future discussions with other customers.

“Unlike other products, Lightbits excels in simplicity. If you like, simplicity by design. The advantage is obvious: the actual installation, for example, can be completed in a record time of around 15 minutes. One tends to doubt oneself whether that was really all. Yes, that’s all.” – Alexander Tilgner

Performance

The Lightbits cluster ran on servers with a pair of mid-level, previous-generation CPUs (2x 24 cores, 2.2 GHz). In front of the cluster we ran a VMware vSphere host based on a much older CPU, whose architecture is already six generations old. For us, it was interesting to see how well the NVMe/TCP protocol runs on older-generation hardware.

We set out to answer two questions:

- Many customer setups still use 10 Gbit/s network technology. Does NVMe over TCP/IP with Lightbits really add value in such environments?

- How well do failover scenarios work with Lightbits? Most customers run a classic environment with two data centers connected via fiber at 10 or 100 Gbit/s. Ideally, failover in such a setup is transparent: the application layer is not aware of it, and neither is the user.

With native support from VMware for NVMe over TCP/IP, Lightbits makes traditional NAS file services on monolithic and expensive SAN arrays replaceable. The NAS filer part can easily be moved into the VMware layer, and the file services can be mapped in the familiar Windows environment. After all, nobody would think of moving the mail service layer to the storage head. Okay, maybe some companies could be trusted to do that.

But we digress; back to the questions. A VMware server with an old CPU architecture was used in the SUT, so the performance values should be interpreted in relation to that architecture. The question is: is the Lightbits solution also worthwhile for weaker or aging application server and hypervisor environments, or is it like shooting at sparrows with a cannon?

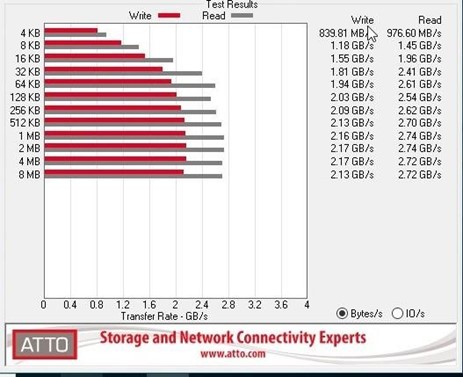

We never thought that this old CPU could handle such transfer rates. The ATTO benchmark was run inside a Windows 2022 VM.

The transfer rate was achieved with 100 Gbit/s interconnects. With 10 Gbit/s interconnects, the maximum performance is of course capped at line speed, so you can reach up to about 1.18 GB/s.
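
The 1.18 GB/s ceiling is consistent with simple protocol-overhead arithmetic. The sketch below is one plausible accounting, not necessarily how the figure was derived in the PoV: it assumes a standard 1500-byte MTU, 20-byte IPv4 and TCP headers, 12 bytes of TCP timestamp options, and 38 bytes of Ethernet framing per packet, and it ignores NVMe/TCP PDU headers. The function name and its defaults are illustrative.

```python
def tcp_goodput_gb_per_s(link_gbit_s, mtu=1500, tcp_option_bytes=12):
    """Estimate TCP payload throughput in GB/s (decimal) on an Ethernet link."""
    # Per-frame Ethernet cost outside the MTU:
    # preamble/SFD (8) + header (14) + FCS (4) + inter-frame gap (12)
    ethernet_overhead = 8 + 14 + 4 + 12
    # IPv4 header (20) + TCP header (20) + TCP options (assumed: timestamps)
    ip_tcp_headers = 20 + 20 + tcp_option_bytes
    payload_bytes = mtu - ip_tcp_headers
    wire_bytes = mtu + ethernet_overhead
    # Gbit/s -> GB/s, scaled by the share of each frame that is payload
    return link_gbit_s / 8 * payload_bytes / wire_bytes


for link in (10, 100):
    rate = tcp_goodput_gb_per_s(link)
    print(f"{link} Gbit/s link: about {rate:.2f} GB/s of payload")
```

Under these assumptions a 10 Gbit/s link carries about 1.18 GB/s of payload; larger MTUs (jumbo frames) would raise the ceiling slightly.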

Resiliency

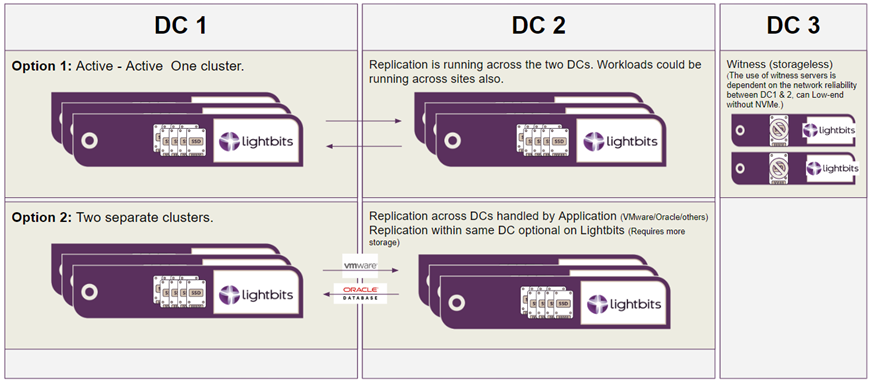

Before the start of the PoV, we came up with the following deployment scenarios for this particular customer use case.

Option 1: An experimental stretched cluster with witness functionality

Option 2: Two separate clusters with application-level replication (VMware & Oracle)

We decided to go with Option 1: one stretched cluster with two nodes in each data center.

The two Lightbits hosts per data center are combined into a so-called failure domain:

FD1 = Data Center 1 with 2 Lightbits hosts

FD2 = Data Center 2 with 2 Lightbits hosts

This was the smallest possible configuration for a PoV; a production scenario would likely see three nodes in each data center. Such a setup still needs a quorum mechanism to avoid split-brain scenarios and to allow automatic cluster decision-making. This role is called a witness, and it should ideally be operated at a third, outside site (DC3) with independent connectivity to both other DCs.

For the sake of simplicity during the PoV, this witness was simply deployed as a VM on the VMware layer. In the real world, of course, the quorum or witness server should not run in the same VMware environment, but in a separate environment, virtual or bare metal, and in a separate physical location.
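
Why the witness location matters can be illustrated with generic majority voting. The sketch below is not the Lightbits cluster algorithm; it assumes one vote per storage node and one for the witness, and the function and variable names are made up for illustration.

```python
def has_quorum(reachable_votes, total_votes):
    """A partition may keep serving only with a strict majority of all votes."""
    return reachable_votes > total_votes / 2


# PoV layout: two failure domains with two nodes each (one vote per node, assumed).
FD1, FD2, WITNESS = 2, 2, 1

# Without a witness, losing one data center leaves 2 of 4 votes: no majority.
print(has_quorum(FD2, FD1 + FD2))                      # False

# With a witness at a third site, the surviving site holds 3 of 5 votes.
print(has_quorum(FD2 + WITNESS, FD1 + FD2 + WITNESS))  # True

# If the witness shares a site with FD1 and that site fails, only 2 of 5 remain.
print(has_quorum(FD2, FD1 + FD2 + WITNESS))            # False
```

The last case is the reason a witness placed inside one of the two data centers cannot arbitrate the loss of that same data center.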

“Of particular note is the Lightbits VMware vCenter plugin. After a while, you forget that this is a plugin, it’s like working with local storage all the time. Very cool.” – Alexander Tilgner

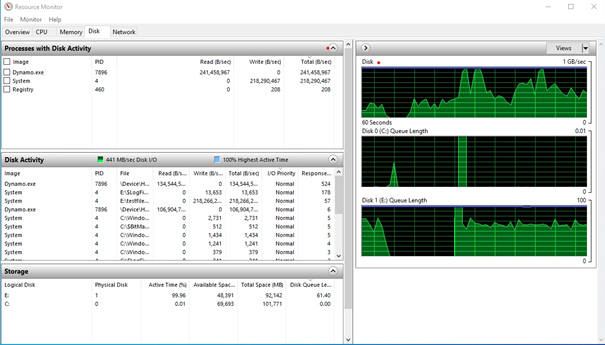

The failover tests went very well. Transparent failover was checked with a simple disk dump (the dd command) from a Linux VM writing to a shared Windows 2022 directory (CIFS).

Under a Linux VM you can use the following command to mount a CIFS share:
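
The exact command from the PoV is not reproduced here. As a minimal sketch, a typical mount invocation and a matching dd write test can be assembled as below. The host name, share name, mount point, user name, and SMB dialect (`vers=3.0`) are placeholders rather than the values used in the lab, and the dd block size and count are likewise assumed.

```python
import shlex


def cifs_mount_command(server, share, mount_point, username):
    """Build a mount argument list for a CIFS/SMB share (needs cifs-utils and root)."""
    return ["mount", "-t", "cifs", f"//{server}/{share}", mount_point,
            "-o", f"username={username},vers=3.0"]


def dd_write_command(target_file, block_size="1M", count=10240):
    """Build a dd argument list that streams zeros into a file on the share."""
    return ["dd", "if=/dev/zero", f"of={target_file}",
            f"bs={block_size}", f"count={count}", "status=progress"]


# Placeholder values; replace them with your own environment.
mount_cmd = cifs_mount_command("win2022-vm", "povshare", "/mnt/povshare", "povuser")
dd_cmd = dd_write_command("/mnt/povshare/failover-test.bin")

# Print the shell commands instead of running them.
print(shlex.join(mount_cmd))
print(shlex.join(dd_cmd))
```

The printed lines can be pasted into a root shell on the Linux VM once the mount point exists; mount then prompts for the password. Alternatively, the argument lists can be passed to `subprocess.run`.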

Within the Windows VM, you can see a so-called write gap in the Task Manager during the failover process.

Although no writes are displayed in the Windows Task Manager, writing continues happily at the application level. Even when we simulated the failure of a complete data center or failure domain, the write speed on the application side was slightly reduced, but no interruption occurred.

After the downed Lightbits nodes were online again, the write gap had closed:

Is there a significant difference between a single-node failover and a site failover?

Yes, of course. If a site fails (two hosts down in the same failure domain, in this scenario), a significant increase in performance can be seen in the Windows Task Manager.

This may seem confusing at first, but while the storage servers in the other site restart, the two remaining nodes only need to serve data to the clients and no longer have to replicate to the other failure domain.

Conclusion: Roughly twice the performance under Windows on the remaining two nodes.

“At this point, even a tired storage administrator should recognize that a failover process occurs. Double bandwidth means a failure domain is lost. Failover re-defined :D” – Alexander Tilgner

Conclusion

Lightbits fits perfectly into Boston’s solution portfolio, for customers big and small.

It offers the functionality of enterprise products, but without the unnecessary ballast: it is easy to install and quick to set up, intuitive, and provides transparent failover with end-to-end NVMe performance and latency. A truly new alternative to iSCSI, hyperconverged solutions, or any RDMA-based solution. Wow!

Seamlessly integrated into VMware and running on standard hardware, even on much older CPU generations, it gets the maximum performance out of the system. The Lightbits NVMe over TCP/IP storage solution did not show any errors or bugs throughout the tests.

Solutions for artificial intelligence (AI) applications and low-latency applications can thus be offered to Boston’s customers simply, efficiently, effectively, and without voodoo additives.